- Global demand for data centre space is set to increase significantly, and the compute density of generative AI (GenAI) significantly increases the electricity required to keep compute capacity cool.

- Real estate is a key determinant of a successful data centre, with data centres having specific locational requirements making land with access to electricity a crucial enabler for data centres.

- In our opinion, the data centre (DC) megatrend tailwind may remain supportive for returns to equity investors over time.

Why are data centres likely to become even hotter sheds?

The launch of ChatGPT and other large language GenAI models has added to the already hot demand for data centres. Currently there are 150 million ChatGPT users globally making 1.7 billion enquiries (questions) per day. Australian-listed DC operator NEXTDC estimates that, in the next three years, ChatGPT’s user base will increase tenfold to 1.5 billion users making 17 billion enquiries per day.

DCs house networked computer servers and are typically used by organisations for the remote storage, processing, and distribution of large amounts of data. DCs themselves are big, sophisticated industrial warehouses with access to lots of electricity and data cables – they are hot sheds. The ultra-fast uptake of GenAI and machine learning (ML) significantly increases data storage and processing requirements, adding to the already strong growth in DC demand from cloud computing. GenAI and ML are also higher density compute models, requiring more cooling, and hence more electricity to power the cooling, than traditional data centre uses. As a result, control of electricity is key to data centres.

Data centres are not new. What is changing is the volume of data now being generated and the intensity of the use of DCs. Data centres are key enablers of the third wave of the digital revolution, artificial intelligence (AI). Real estate owners with land that has access to the requisite power are ideal facilitators of this digital infrastructure megatrend.

Strong demand profile

The digital revolution, the third industrial revolution, which began in the 1970s, is entering its own third wave: artificial intelligence. The first wave of enterprise computing and storage was located on the users’ premises. The second wave, cloud computing, pushed computing and storage offsite to specialised data centres/warehouses. The third wave, artificial intelligence, is increasing the amount of data processed and stored at a geometric rate, placing huge pressure on DC capacity.

GenAI and ML involve the collection, processing, storage and lightning speed access of huge amounts of data, with that data stored in DCs. Research by global investment bank, Morgan Stanley, indicates that the DC market could quintuple globally over the next 10 years driven by continued migration of data to the cloud, geometric growth in GenAI and ML applications and increased data sovereignty, which will see more data processed and stored outside the US.

Research indicates we may be only 50% of the way globally through the digitalisation of information workloads and the storage of data output in the cloud (whether private or public cloud). The cloud refers to the practice of using a network of remote servers hosted on the internet to store, manage, and process data, rather than a local server or personal computer located on the user’s premises. Cloud servers are located within DC warehouse structures. An increased focus by corporates on IT cost and efficiency management may accelerate this well-established trend.

GenAI and ML require larger DC locations for graphics processing units (GPU)-heavy clusters, greater business-to-consumer (B2C) demand, and lower latency (the delay before a transfer of data begins following an instruction for its transfer). In addition, GenAI drives far greater data processing and storage needs, which means far greater electricity needs to cool down higher density computing capacity. GenAI workloads can be split into model training and model inference. GenAI training is the process that enables AI models to make accurate inferences. GenAI inference is when an AI model produces predictions or conclusions. AI compute is also powering industrial robots, big data analytics and the internet of things (IoT), managing, analysing and acting on tremendous amounts of data from a variety of sensors. Global DC infrastructure leader, Vertiv, estimates that GenAI could boost underlying cloud growth rates by +50% per annum if sufficient power and compute capacity (AI chips are challenging to get hold of) can be sourced.

Data sovereignty, the need to retain data within a legal jurisdiction, may contribute to growth in DC development outside the US. For example, at present, just 20-30% of European data is processed and stored in Europe, with the remainder US-based. Potential legal framework changes could drive increased near-shoring of European data, and therefore higher European growth rates.

While there are many types of DCs, most modern DCs are for colocation or hyperscale users. Colocation (Colo) data centres are large DCs that rent out rack space to many third parties for their services or other network equipment. The use case for each hinges on factors such as cost, compliance, redundancy, scalability, security and availability. Colo service is used by businesses that may not have the resources to maintain their own DC but want the benefits of affordability, control and scalability. Servers and other equipment from many different companies are ‘co-located’ in one data centre. The hardware is usually owned by the companies themselves, and simply housed (and sometimes maintained) by the DC staff. Colo customers may have equipment located in multiple places, which is important for users with large geographic footprints in terms of risk management and making sure computer systems are close to physical users.

A hyperscale DC is a huge data centre that houses critical compute and networking infrastructure designed and purpose-built for massive compute and data storage, with lightning-fast connectivity optimised for global traffic. Hyperscale is ideal for extensive processing demand and diverse applications – as the name implies, hyperscale is all about achieving massive scale in compute, typically for GenAI, big data or cloud computing. These facilities allow companies like Amazon, Google and Microsoft to draw on their processing power to deliver key services to customers worldwide. A hyperscale DC is big, not only in data capacity (hyperscale DCs typically house 5,000 or more servers) but also in terms of power consumption. A single hyperscale site can draw more than 50MW of power. With access to DCs currently limited, hyperscalers may use Colo DCs to expand their physical footprints until suitable hyperscale facilities can be justified or sourced.

AI factory DCs are an emerging DC facility specifically designed to accommodate the intense computational demands of AI workloads. AI factories enable the fast, efficient automation of repetitive tasks while optimising business processes. AI factories may be used for GenAI inference, managing the enquiries and questions users pose for GenAI. These centres are likely to be closer geographically to users and may be small footprint, high density facilities.

All about the watts

Data centres require huge amounts of power to keep the valuable computer servers inside them cool. The capacity of DCs is measured in megawatts (MW) of electrical power, the rate at which electricity is drawn, while the energy consumed over time is measured in megawatt hours (MWh). A megawatt equals 1,000 kilowatts (kW), which is equal to 1 million watts. One kilowatt hour (kWh) refers to one hour of electricity consumed at a rate of 1,000 watts. A DC rack is a large framework, usually made from steel, that houses servers and network equipment. A traditional DC compute rack uses 8-10kW of power. A new Nvidia B200 GPU GenAI rack may use 120kW. 10-30MW DCs are becoming the norm for Colo use, but we are seeing the emergence of 200MW hyperscale DCs in locations where use cases (e.g. AI training), electricity grid access and land prices support their development. To put this in context, the average New Zealand household uses approximately 7MWh (7,000kWh) of electricity per annum.
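The distinction between power (kW, MW) and energy (kWh, MWh) can be made concrete with a short calculation. The sketch below is illustrative only: it reads the rack and facility figures above as power ratings, assumes a facility running at full load all year, and ignores cooling overhead. The function names are hypothetical.

```python
# Power (kW, MW) is a rate; energy (kWh, MWh) is power sustained over time.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_mwh(power_mw: float, utilisation: float = 1.0) -> float:
    """Energy in MWh used over a year by a load averaging power_mw * utilisation."""
    return power_mw * utilisation * HOURS_PER_YEAR


def racks_supported(facility_mw: float, rack_kw: float) -> int:
    """Whole racks a given power budget can feed (assumption: no cooling overhead)."""
    return int(facility_mw * 1000 // rack_kw)


# A 10MW hall filled with traditional 10kW racks versus 120kW GenAI racks.
traditional_racks = racks_supported(10, 10)
genai_racks = racks_supported(10, 120)

# A 200MW campus at full load, compared with households using 7MWh a year each.
campus_mwh = annual_energy_mwh(200)
households = campus_mwh / 7

print(traditional_racks, genai_racks)
print(f"{campus_mwh:,.0f} MWh per year, roughly {households:,.0f} households")
```

Under these assumptions the same 10MW budget feeds 1,000 traditional racks but only 83 GenAI racks, which is one way to read the article's point that density, not floor area, is the binding constraint.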

The greater the density of racks in a DC server hall or the greater the amount of compute power on a rack (e.g. more hot-running GPUs versus CPUs) the greater the amount of heat. Optimal operating temperature for a computer server is 19-26 degrees Celsius. To keep the large racks of servers cool, DCs blow air through the server halls. GenAI workloads will therefore demand greater power densities. While some of this density may be able to be spread around existing DCs, creating hot spots, it is likely that GenAI power densities will be addressed by future footprint expansion. As the amount of compute power goes up per rack with the introduction of more GPUs for AI and big data use, air cooling may no longer be sufficient to maintain optimal operating temperatures and liquid cooling may be needed to better dissipate heat.

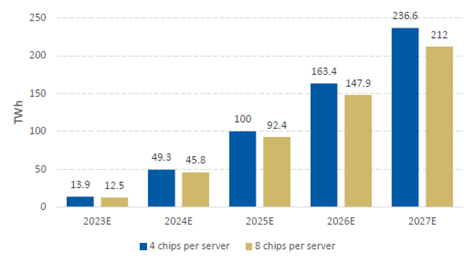

Research by global investment bank Morgan Stanley indicates global power usage from GenAI could show a rapid 68% compound annual growth rate over 2024-2027, lifting from 45.8-49.3TWh (a TWh being 1 million MWh) in 2024, to potentially 212-236.6TWh in 2027 (Figure 1). To put this into context, a country such as Spain would consume around 224TWh of power per year. This range depends on assumptions made around processing chips per server - more chips reduce power needs.

Figure 1: Morgan Stanley’s base case forecast global projected GenAI power demand

Source: Company data, TrendForce, Morgan Stanley Research estimates
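As a rough cross-check of the growth rate quoted above, the following sketch compounds the 2024 range forward at 68% a year for three years and, separately, backs out the growth rate implied by the 2027 range. It is a simple arithmetic illustration, not Morgan Stanley's model; the function names are hypothetical.

```python
def compound(start: float, rate: float, years: int) -> float:
    """Value after growing start at a constant annual rate for a number of years."""
    return start * (1 + rate) ** years


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes start to end over the given years."""
    return (end / start) ** (1 / years) - 1


# 2024 range of 45.8-49.3TWh grown at 68% a year to 2027 (three years).
low_2027 = compound(45.8, 0.68, 3)    # roughly 217 TWh
high_2027 = compound(49.3, 0.68, 3)   # roughly 234 TWh

# Growth rates implied by the quoted 2027 range of 212-236.6TWh.
implied_low = cagr(45.8, 212.0, 3)    # a little under 67%
implied_high = cagr(49.3, 236.6, 3)   # a little under 69%

print(f"{low_2027:.0f}-{high_2027:.0f} TWh")
print(f"{implied_low:.1%} to {implied_high:.1%}")
```

Both directions land close to the quoted figures, so the 68% rate and the 2024 and 2027 ranges are internally consistent to within rounding.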

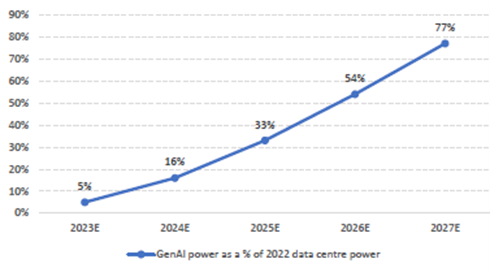

Morgan Stanley’s forecasts suggest that GenAI alone could drive incremental power demand equivalent to 77% of current data centre capacity by 2027 (Figure 2), equivalent to an additional 1.1% to global total power demand from 0.1% currently.

Figure 2: In Morgan Stanley’s base case, 2027 GenAI power demand is equal to 77% of 2022 data centre power usage

Source: Company data, TrendForce, Morgan Stanley Research estimates

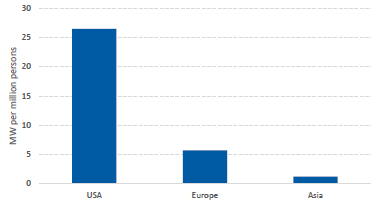

We see potential for a faster pace of DC development in New Zealand and Australia driven by data sovereignty/data onshoring requirements, better electricity availability than other parts of the world and the potential for northern hemisphere GenAI-use data to be housed and trained in geopolitically stable southern hemisphere locations. Data centre space penetration in Asia Pacific is low when compared to the more mature US market. While Sydney is now one of the top 10 global DC locations, Asia Pacific DC penetration, at approximately 2MW per million persons, is only a tenth of the US market (Figure 3), which itself is set to double in size over the next few years.

Figure 3: DC penetration - MW per million persons (population)

Source: Morgan Stanley Research

America itself is running out of power as DC growth accelerates. As reported by The Washington Post, America is experiencing a sharp surge in demand for electricity, driven by the rapid growth of power-hungry DCs and clean-tech manufacturing facilities. This surge is straining the nation's power grid, prompting utilities and regulators to seek innovative solutions to meet the escalating energy needs.

What makes a good DC location?

Power/electricity, planning/zoning, access to fibre with low latency, the location of customers and land prices influence what makes a good data centre location.

Access to a reliable electricity network is important to DC locations – reliability, resilience and uptime are key to DC users. It is very hard to build long-distance transmission lines quickly to move power from generation to use, and it is hard to build electricity generation plants. And so, land with power is key. The unprecedented scale of demand, driven not just by the likes of hyperscalers wanting larger data centres, but also at the property level where power requirements have the potential to triple with GenAI and ML uses, means land with existing access to power networks at scale is in hot demand.

Traditional industrial property locations often have significant electrical power infrastructure in place to support historic manufacturing and industrial use. Such industrial real estate assets often offer good proximity to electricity substations and electricity networks, have good transport access and land values are reasonable for use as a DC. In the right locations, this power infrastructure is of higher value to the DC industry than any industrial manufacturing or logistics infrastructure, providing the potential for a value uplift from conversion from industrial use to DC use.

However, not just any land with access to power makes the cut for DC use. Connection to data cables, including subsea cables, with significant capacity and low latency is crucial. DCs need to have low natural hazard risk (e.g. low risk of floods, seismic activity, or bushfire impact) and low human hazard risk (e.g. not being on aircraft flight paths and having high levels of security). Cloud deployment may favour more inner-metropolitan cluster locations. AI training can be further out as it tends to be less latency sensitive. In the US, large AI requirements which are less latency sensitive seek cheap power and land.

While DC demand is increasing, DC supply is likely to lag demand, supporting the value of existing modern DC assets. Even when an ideal site is discovered, getting approval to develop a site may take more than 12 months and build time may take an additional one to two years. In the near term, getting access to the technology (particularly GPUs) that drives applications is also a limiting factor.

Where are the DC investment opportunities for real estate investors?

What’s the investment opportunity for owners of land which may be suitable for a DC? DCs may represent a higher and better use than traditional industrial use, offering an attractive value uplift for real estate owners with land suitable for DC developments. Landowners have a range of options to realise value in existing land banks which may be suitable for DC use, including selling land parcels to third parties for development, constructing powered shells which are leased to operators, and developing turnkey fully operating data centres with partners.

Land sale with secured power to an operator provides a quick, potentially lower, return option for landowners. DC land cost can be 10%-30% of the end value of a completed DC, which represents a significant uplift (in some cases more than doubling) in value against existing traditional land uses. The sale of land outright may be a lower return opportunity across a broad portfolio of assets but may be the best use for specific assets in specific countries.

The development of DC facilities may be an attractive option for some real estate owners given DC development margins can be double the margin of traditional industrial real estate developments. DC development margins are estimated at circa 60-80% creating higher return on equity versus traditional warehouse development margins of circa 35-45%, but data warehouses require approximately two times more build time than traditional warehouses.

Constructing a powered shell, delivering the external building fully powered for users to fit out, may generate a cash yield on cost above 7%, rerating to a 5% capitalisation rate (real estate discount rates which consider movements in 10-year bond yields and growth prospects in each market) with average rental growth of circa 4%. Powered shell lease terms tend to be long at 15 years plus rights of renewal, leases tend to be triple net with users meeting all operating costs, and per-square-metre rental is at a premium to traditional land use, reflecting the access to electricity networks (for example, powered shell DC rent in Sydney is currently $500+ per square metre, compared with industrial warehouse rentals closer to $225 per square metre).
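The gap between a 7% yield on cost and a 5% capitalisation rate can be turned into an indicative value uplift with a simple direct-capitalisation calculation. The sketch below is an illustration under simplified assumptions (a hypothetical development cost of 100, first-year net rent capitalised in perpetuity, no rental growth, leasing or financing costs); it is not the valuation method used in this article, and the function name is hypothetical.

```python
def value_on_completion(cost: float, yield_on_cost: float, cap_rate: float) -> float:
    """Capitalise first-year net rent (cost * yield_on_cost) at cap_rate."""
    net_rent = cost * yield_on_cost
    return net_rent / cap_rate


cost = 100.0  # hypothetical development cost, in any currency unit
value = value_on_completion(cost, yield_on_cost=0.07, cap_rate=0.05)
uplift = value / cost - 1  # about 0.40, i.e. value roughly 40% above cost

# Rent premium of a powered shell over a traditional warehouse in Sydney,
# using the $500 and $225 per square metre figures quoted above.
rent_premium = 500 / 225  # a little over 2.2 times

print(f"value {value:.0f}, uplift {uplift:.0%}, rent premium {rent_premium:.1f}x")
```

The result is sensitive to both inputs: a higher capitalisation rate or a lower achieved yield on cost narrows the uplift quickly.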

Turnkey assets include the installation of internal cooling, racking and compute capacity, and customer contracts are charged on a per kW per month basis. Turnkey DC production with external capital investors and hyperscale partners may cost 3-4 times more than only investing in a powered shell. Such assets are valued on a perpetual capitalisation rate with current capitalisation rates of approximately 5.5%-6%. For a real estate investor, investing in such turnkey data warehouse infrastructure outright on a perpetual capitalisation or yield basis is challenging in terms of returns due to the depreciation of data centre infrastructure. To make such turnkey developments viable, real estate owners would need to consider partnering with hyperscale clients. Historically, DC operators have wanted full autonomy and control of assets but, as suitable land gets more expensive and difficult to get, they are increasingly considering lease models. DC operators’ core business is not real estate but data management. In the US, we are seeing DC operators that own physical real estate selling stabilised/mature DCs or putting assets into capital partnerships to help fund additional DC capacity.

Data centres are becoming increasingly expensive to deliver, with many telcos, cloud providers and hyperscalers undercapitalised versus the capital investment required to construct and fit out the centres. For example, a fully operating mega DC campus (200 MW) with AI capabilities could cost US$10bn+ (NZD$16.5bn+), comprising real estate expense of circa US$2bn (NZD$3.6bn, assuming build cost of US$10m per MW), plus hardware cost including heating, ventilation, air conditioning, electrical infrastructure and compute power of US$8-9bn (assuming 30-40k AI chips at US$20-30k each). A 200MW asset may need more than 75,000 square metres (sqm) of space. To put that in context, the bowl of the Wellington Regional Stadium is 48,000 sqm.
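The campus figures quoted above can be checked with a few lines of arithmetic. This sketch uses only the US dollar and floor-area numbers from the paragraph; it treats the 75,000 sqm floor area as a lower bound, as the text does, and the variable names are hypothetical.

```python
CAMPUS_MW = 200                  # campus capacity
BUILD_COST_USD_M_PER_MW = 10     # assumed build cost quoted above, US$m per MW
HARDWARE_USD_BN = (8.0, 9.0)     # quoted hardware cost range, US$bn
FLOOR_AREA_SQM = 75_000          # quoted minimum floor area
STADIUM_BOWL_SQM = 48_000        # Wellington Regional Stadium bowl

# Real estate component: 200MW at US$10m per MW, converted from US$m to US$bn.
real_estate_usd_bn = CAMPUS_MW * BUILD_COST_USD_M_PER_MW / 1000

# Total range: real estate plus the low and high hardware estimates.
total_usd_bn = tuple(real_estate_usd_bn + h for h in HARDWARE_USD_BN)

# Space intensity and the stadium comparison.
sqm_per_mw = FLOOR_AREA_SQM / CAMPUS_MW
stadium_multiple = FLOOR_AREA_SQM / STADIUM_BOWL_SQM

print(real_estate_usd_bn, total_usd_bn, sqm_per_mw, stadium_multiple)
```

On these inputs the real estate component is about a fifth of the total, and the campus needs at least one and a half stadium bowls of space.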

The complexity of delivering a DC solution is increasing (planning, land, power, suppliers, etc.) and the urgency of demand plays to the strengths of established developers. Institutional investor capital is increasingly looking to invest in these long-term digital infrastructure opportunities. Trusted fund managers with established relationships may be better placed to provide a solution for all parties.

Hot but non-linear future

Investing in data centres offers a potentially attractive, risk-adjusted way to invest in the infrastructure that is driving the digital revolution. While the DC megatrend is established, it is an evolving ecosystem, with researchers highlighting we are only in the first generation of the AI evolution. GenAI and ML are growing rapidly, and their higher density of compute power requires significantly more electricity to cool the compute racks than current DC uses, significantly increasing the need for DC capacity.

The rate of technology change means we investors may be wrong in our assumptions about DC demand – as with most technology change, investors may overestimate the impact of change in the near term and underestimate the longer-term impact of the change. While technology change will improve the energy sustainability of DCs, it is likely that well-located land with industrial-strength access to electricity networks and data cables will remain attractive. Our research suggests there is attractive long-term sustainable demand growth for DCs, but there will be uncertainty as to the timing of growth, depending upon customer power density requirements and usage levels.

At Harbour, we are investing in a range of companies that may benefit from the growth in DC demand, including industrial landowners Goodman Group and Goodman Property, and Infratil, part-owner of Canberra Data Centres. The share prices of some DC-related companies have risen significantly over the last few years meaning investors need to be selective when investing in the sector. In our opinion, the DC megatrend tailwind may remain supportive for returns to equity investors over time.

IMPORTANT NOTICE AND DISCLAIMER

This publication is provided for general information purposes only. The information provided is not intended to be financial advice. The information provided is given in good faith and has been prepared from sources believed to be accurate and complete as at the date of issue, but such information may be subject to change. Past performance is not indicative of future results and no representation is made regarding future performance of the Funds. No person guarantees the performance of any funds managed by Harbour Asset Management Limited.

Harbour Asset Management Limited (Harbour) is the issuer of the Harbour Investment Funds. A copy of the Product Disclosure Statement is available at https://www.harbourasset.co.nz/our-funds/investor-documents/. Harbour is also the issuer of Hunter Investment Funds (Hunter). A copy of the relevant Product Disclosure Statement is available at https://hunterinvestments.co.nz/resources/. Please find our quarterly Fund updates, which contain returns and total fees during the previous year on those Harbour and Hunter websites. Harbour also manages wholesale unit trusts. To invest as a wholesale investor, investors must fit the criteria as set out in the Financial Markets Conduct Act 2013.